Research

My research focuses on distributed systems – using a combination of new algorithms and systems techniques to build practical systems that are faster, more reliable, easier to program, and more secure.

Current projects

We are rethinking the design of distributed systems for the datacenter environment. Traditional distributed systems rely on algorithms designed independently from the underlying network, making worst-case assumptions about its behavior. The datacenter network presents a new opportunity, particularly as programmable devices become widespread. It is now possible to co-design distributed systems with new network-layer primitives.

We are exploring applications of this technique in the Prometheus project; many of the systems described below are part of this project.

Pegasus is a new storage architecture that leverages programmable switch ASICs to dynamically balance load across storage servers. It selectively replicates the most popular data objects to distribute load, and builds a novel in-network coherence direc- tory in the switch data plane to manage these replicated objects. This allows Pegasus to achieve load-aware forwarding and dynamic rebalancing, while still guaranteeing data coherence. Pegasus improves the 99% tail latency of a distributed key-value store by more than 95%, and yields up to a 9x throughput improvement.

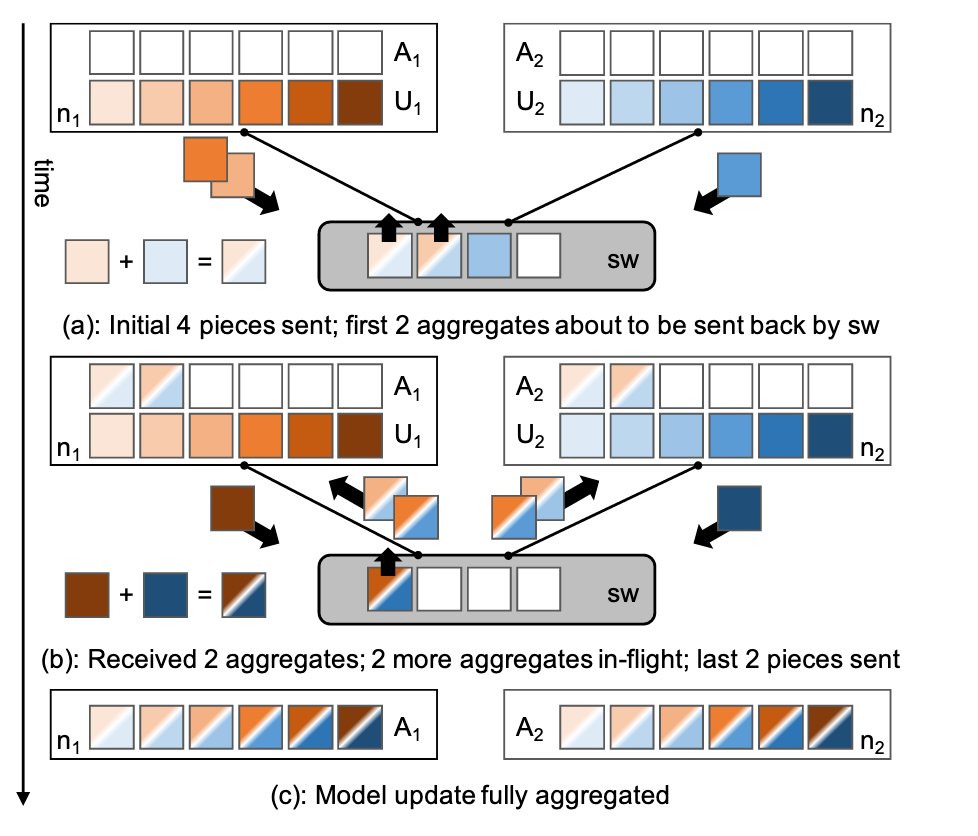

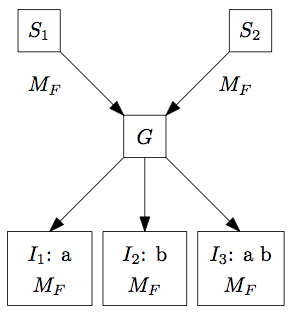

Distributed machine learning training workloads now find that the network can be a bottleneck, as a result of both increasing scale and single-node performance. We accelerate distributed parallel training by designing a communication primitive that uses a programmable switch dataplane to execute a key step of the training process. SwitchML reduces the volume of exchanged data by aggregating the model updates from multiple workers in the network.

Distributed transactional databases use a combination of costly atomic commitment and replication protocols to ensure fault tolerance and serializability of updates. Eris takes a very different approach. It moves a core piece of concurrency control functionality into the datacenter network itself. The result is that Eris avoids both replication and transaction coordination overhead: it processes a large class of distributed transactions in a single round-trip from the client to the storage system without any explicit coordination between shards or replicas.

Distributed systems are traditionally designed independently from the underlying network, making worst-case assumptions about its behavior. Such an approach is well-suited for the Internet, where one cannot predict what paths messages might take or what might happen to them along the way. However, many distributed applications are today deployed in datacenters, where the network is more reliable, predictable, and extensible. We argue that in these environments, it is possible to co-design distributed systems with their network layer, and doing so can offer substantial benefits.

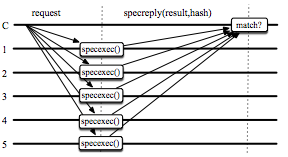

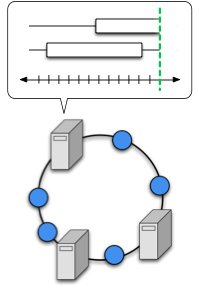

Our recent work uses this approach to improve state machine replication protocols, which are important both as the standard mechanism for ensuring availability of critical datacenter services and as the basis for distributed storage systems. The first project uses network-level techniques to provide a Mostly-Ordered Multicast primitive (MOM) with a best-effort ordering property for concurrent multicast operations. We use this primitive to build Speculative Paxos, a new replication protocol that relies on the network to order requests in the normal case, while still remaining correct if messages are delivered out of order. By leveraging the datacenter network properties, Speculative Paxos can provide substantially higher throughput and lower latency than the standard Paxos protocol.

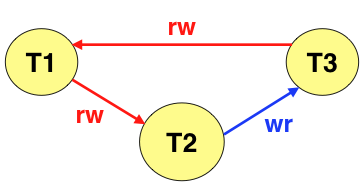

Transactional Application Protocol for Inconsistent Replication, or TAPIR, is a new protocol for linearizable distributed transactions built atop a new replication protocol that provides no consistency guarantees. TAPIR eliminates expensive coordination from the replication layer, yet provides the same transaction model and consistency semantics as existing systems like Spanner. It can commit transactions in a single round-trip, greatly improving both latency and throughput relative to prior systems.

Modern datacenter applications struggle with the need to access thousands of servers while still providing a fast response time to the user. In these situations, the user’s overall request is not complete until the slowest of the subrequests has completed, making it important to design network services that offer not just low latency but predictable latency.

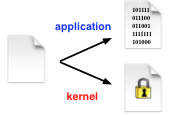

We are developing techniques for building systems that offer predictable response time. At the operating system level, we have conducted an extensive measurement study to identify factors that can cause even a completely deterministic application to have occasional requests that take several orders of magnitude longer than expected. Using a set of modifications to the kernel scheduler, network stack, and application architecture, we can reduce the tail latency to within a few percent of optimal.

Arrakis is a radical restructuring of the traditional operating system inspired by a concept from high-speed network routers: we split the operating system into a separate control plane and data plane. Leveraging recent virtualized I/O hardware technology for network and storage device access, we move the operating system off the I/O data path by providing safe, direct access to hardware devices at user level. The OS kernel is needed only on the data plane, to configure which data paths are allowed and what resource limits should be enforced in hardware.

Today’s mobile devices sense, collect, and store enormous amounts of personal information, while our favorite applications let us share that information with family and friends. We trust these systems and applications with our sensitive data and expect them to maintain its privacy. As we have repeatedly witnessed, this trust is often violated due to bugs, confusing privacy controls, or an application’s desire to monetize personal data.

Agate is a trusted distributed runtime system that: (1) gives users the power to define privacy policies for their data, and (2) enforces those policies without needing to trust applications or their programmers. Agate combines aspects of access control and information flow control to ensure that applications executing across mobile platforms and cloud servers meet our privacy expectations.

Older projects

TxCache is a transactional distributed application-level cache. Application-level caches (e.g. memcached) are a popular option for scaling database-driven websites. TxCache addresses two problems with existing caches that make them difficult to integrate with applications. It ensures that any data seen within a transaction, whether from the cache or the database, reflects a consistent snapshot of the database. It also offers a simple programming model, where application developers simply designate certain functions as cacheable. The system automatically caches their results and keeps them up to date as the underlying data changes, eliminating an entire class of cache management errors.

TxCache can substantially increase the performance of web applications, and achieves performance comparable to that of a non-transactional cache, showing that consistency does not have to come at the price of performance.

PostgreSQL 9.1 introduces a new serializable isolation level that is based on Serializable Snapshot Isolation (SSI) rather than the traditional locking mechanisms used in most other databases. This allows it to provide true serializability without blocking, significantly improving performance for read-heavy workloads.

This the first implementation of SSI in a production database release as well as the first in a database that did not previously have a lock-based serializable isolation level. Our VLDB paper discusses some of the challenges that resulted, and several new extensions to SSI that improve performance for read-only transactions.

Aeolus is a platform for building secure distributed applications using the decentralized information flow control model. Aeolus differs from previous information flow control systems in that it is intended to be easier to understand and use. It incorporates a new, simpler security model that combines principal-based authority management with thread-granularity information flow tracking, and provides new abstractions that support common design patterns in secure application design. Aeolus is implemented as a Java library, using a new lightweight isolation mechanism to support controlled sharing between threads.

Census is a platform for building large-scale distributed applications that provides a membership service and a multicast mechanism. The membership service provides every node with a consistent view of the system membership, which may be global or partitioned into location-based regions. Census distributes membership updates with low overhead, propagates changes promptly, and is resilient to both crashes and Byzantine failures.

Census is designed to provide this consistent membership abstraction at very large scale, e.g. multi-site datacenters. It does this using a novel multicast mechanism that is closely integrated with the membership service.

Commodity operating systems are complex and prone to compromise. Overshadow is a virtual-machine-based system for protecting the privacy and integrity of unmodified applications even when the OS they run on is completely compromised. Overshadow uses multi-shadowing, a new technique that uses the hypervisor to provide the application with a normal view of its resources, but the OS with an encrypted view. This allows the operating system to remain responsible for managing application resources, without being able to read or modify their contents, permitting a smaller trusted computing base.

Later work considered the security implications of malicious OSes, an analysis relevant not just to Overshadow but also to other systems that protect security-critical portions of applications from the OS. Previous work focused on CPU and memory isolation; our analysis instead examined higher-level semantic attacks, where malicious misbehavior by various OS components can undermine application security, and proposed an architecture for mitigating them.

Arpeggio is a peer-to-peer file-sharing network based on the Chord distributed hash table. It performs search queries based on file metadata efficiently by using a distributed keyword-set index, augmented with index-side filtering. Arpeggio also introduces new techniques for tracking the availability of data without inserting the data itself into the DHT, and for using information in the index to improve the availability of rare files.